Old School NLP Technologies - Automata, Transducers, etc

Wishing you a happy new year 2022. It’s almost as if 2021 never happened.

Old School Technologies are still relevant

Baselines in AI projects are used to estimate the difficulty of a problem and set performance targets. For example, you start a project by testing out rules & basic ML algorithms like Logistic Regression, SVM, etc and then scale to Neural Networks in the phase-2 of the project.

To keep things in perspective

Do you know that it is tough to build a Neural Networks that can perform a simple “ADD” operation. You need to pump-in too many examples. Why not just use a rule? Why are we even training a NeuralNet for it?

And we can mutually agree that Neural Networks are data hungry. So, at times, it is best to rely on rule-based solutions for economical and efficiency reasons.

Automata & Transducers

Automata

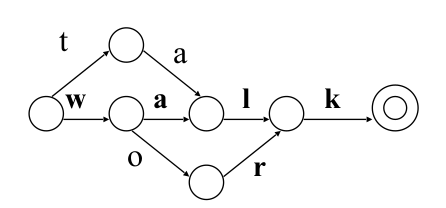

Let us assume that you need to search for a word from a vocabulary list. Here, you can build a hashmap for each word for easy access. Instead, you can also build a automata from words i.e. look at the figure below,

From the figure, we can notice that automata merges the common paths and makes it easy to parse the words. We can perform different operations easily,

For example,

- We can find words that start with “w” - there are only 2 paths from “w” -> walk and work.

- Finding valid words. If “watj” is given as input, it won’t reach the final state. It will stop after “w” & “a”. Hence, not a valid word.

- In Finding common suffixes. In our case, “-lk”.

Infact, the famous regex expressions library we use in our programming languages are based on the same logic.

Transducers

Transducers are an extension to Automata where they “map” one string of symbols to a different string of symbols. They are used for different purposes.

For example, pluralizing words

frog: frogs

bus: buses

goose: geese

...

Another best things about Transducer is that you can reverse them. For example, you can de-pluralize/singularize words just by reversing the above transducer.

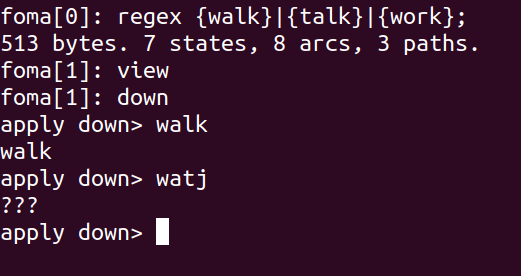

FOMA: The tool

FOMA is a useful tool in implementing Automata & Transducers. Fun fact, it is developed by my current professor Prof. Mans Hulden. You can install it from the instructions here.

If you want to store your vocabulary as an automata, you can do the following (Figure 3). You can search for valid words by the command down .

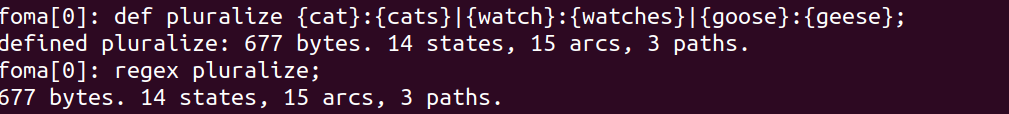

When it comes to transducer, we declare {input}:{output}.

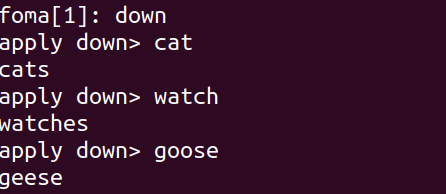

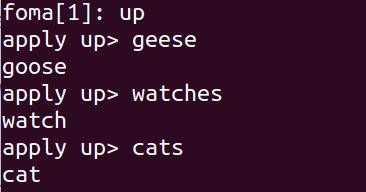

If you want to pluralize, you use the same command down.

If you want to de-pluralize, you reverse it. So, logically, the command is up. Now, input expected is a plural word and output will be its singular form.

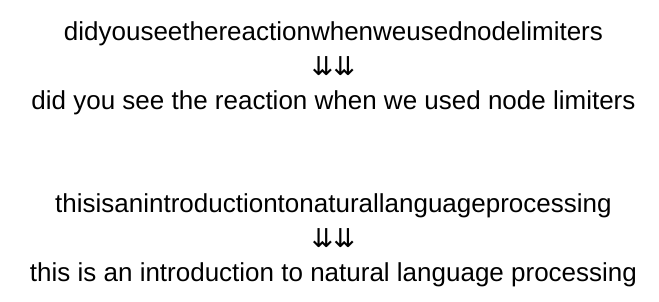

The task of Making sense: Segmentation Problem

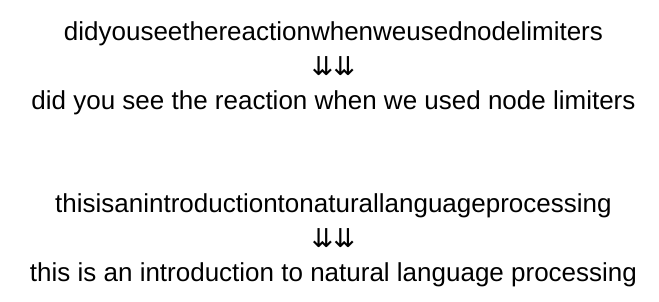

Let’s consider a task where your input is a sentence with no space between the words. Now, how do you approach this problem? Let’s do this with FOMA. I’ll also discuss how to approach it with Dynamic programming & Neural methods later.

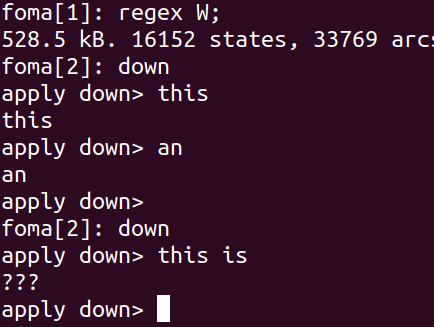

Let’s load the list of english words first and store them in W;

read text engwords.txt

def W;

where engwords.txt is

word1

word2

word3

...

Now, our W is an automata. We can check the words by down. The input can only be one word. After one word, we reach the final state, thus stops parsing the input. You can see that it doesn’t validate input with more than one word.

In order to accept multi word input, we need to make some changes.

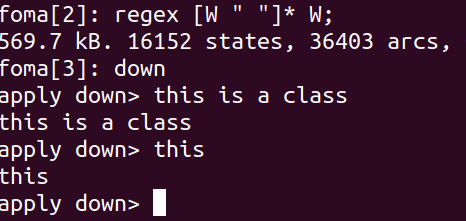

regex [W " "]* W;

In the above command, “*“ means 0 or more occurences. So, the expression means any number of “word followed by space” and one word. This ensures that input is atleast one word.

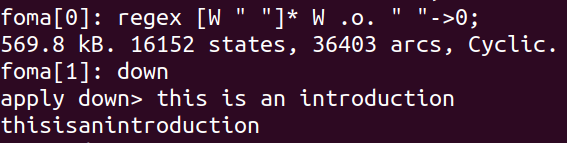

In Transducer, you can build modules. Our final transducer is a sequence of two modules i.e operations.

- The first module accepts multi-word input.

- In our second module, we remove spaces.

If you notice, with down command, we are removing spaces between words which is the exact opposite of what we are trying to do.

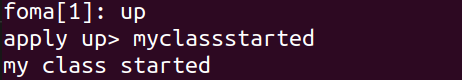

Incase you didn’t get it yet, that’s the final solution. Well, do you remember the “reversing transducer” up command. When we reverse, the first operation is reversed second module i.e add spaces in every combination. Next, the first module is used as a filter to allow sequences which have only valid words.

Other solutions:

- Word Ninja is also a non-ML solution based on dynamic programming. More details here.

Leave a comment