Delineating my NLP experience

Tools:

- Foundational NLP tools: I have used NLTK, spaCy, Gensim, and the Stanford CoreNLP suite. With the advent of Transformers and the rise of pretrained language models, mentioning them feels redundant, but here we go anyway.

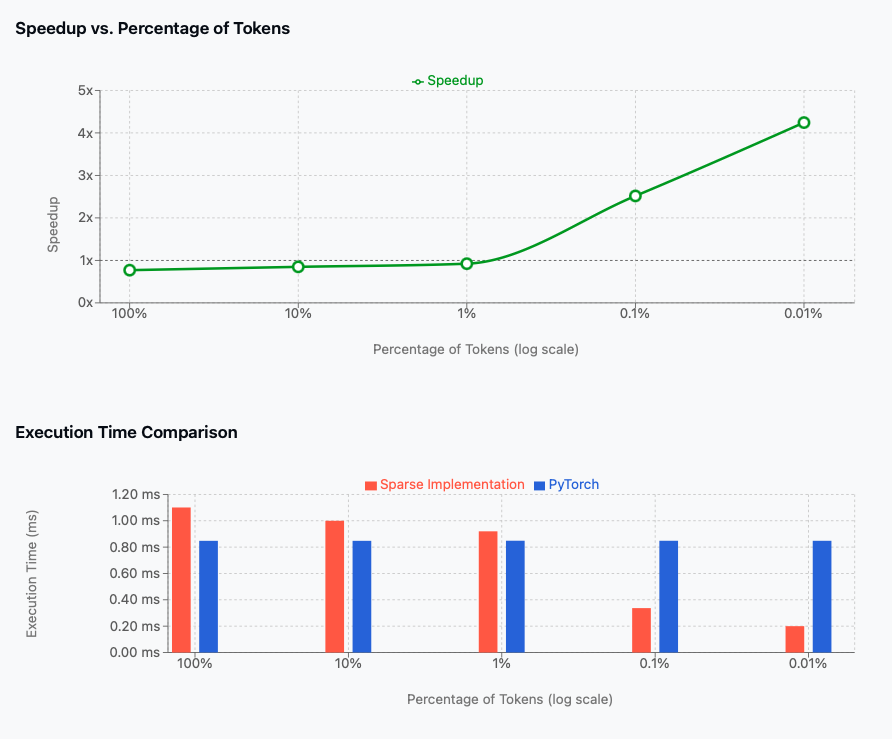

- Deep Learning Platforms: I’m a constant advocate of PyTorch. At the same time, I couldn’t avoid the mandatory TensorFlow/Keras assignments in my Master’s. I use the fastai toolkit for learning purposes.

- NLP Libraries: I have used HuggingFace Transformers, AllenNLP, Flair, and OpenNMT. I used OpenNMT to build machine translation baselines for my undergrad thesis, and I use HuggingFace Transformers in most of my recent projects. I have used AllenNLP for coreference resolution and Flair for sentiment analysis and NER.

- NLP Architectures: I am up to date with recent transformer variants and language models (autoregressive and denoising-based), including BERT, RoBERTa, DeBERTa, XLNet, T5, BART, GPT-1/2/3, and CLIP. At the same time, if there is a need for older techniques, dating back to automata, I can work with those too. Here’s my mini blog explaining how to use the FOMA tool (an automata library) for a downstream NLP task.

Techniques:

- Machine Translation: I have built machine translation baselines using OpenNMT. My thesis was on unsupervised machine translation: on top of the standard back-translation approach, we proposed that leveraging a lexicon built from cross-lingual embeddings would significantly improve training convergence. I have also used HuggingFace Transformers to fine-tune models for my recent college projects.
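To make the lexicon idea concrete, here is a minimal sketch (not the thesis implementation). It assumes source and target word vectors already live in one shared cross-lingual space and pairs each source word with its nearest target word by cosine similarity. The function name `induce_lexicon`, the `min_sim` threshold, and the toy vectors are all hypothetical and chosen only for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def induce_lexicon(src_emb, tgt_emb, min_sim=0.5):
    """Map each source word to its most similar target word.

    src_emb and tgt_emb are dicts of word -> vector, assumed to be
    aligned in one shared cross-lingual space already.
    """
    lexicon = {}
    for src_word, src_vec in src_emb.items():
        best_word, best_sim = max(
            ((tgt_word, cosine(src_vec, tgt_vec)) for tgt_word, tgt_vec in tgt_emb.items()),
            key=lambda pair: pair[1],
        )
        # Keep only reasonably confident pairs (threshold is an assumption).
        if best_sim >= min_sim:
            lexicon[src_word] = best_word
    return lexicon

# Toy, hand-made vectors (not real embeddings), for illustration only.
src_emb = {"dog": [0.9, 0.1, 0.0], "house": [0.1, 0.9, 0.1]}
tgt_emb = {"perro": [0.8, 0.2, 0.1], "casa": [0.0, 1.0, 0.2], "azul": [0.1, 0.1, 0.9]}

lexicon = induce_lexicon(src_emb, tgt_emb)
print(lexicon)
```

A lexicon like this could, for example, provide word-by-word translations to seed back-translation, though how it is actually plugged into training depends on the setup.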

The pharmaceutical giant Novartis wanted an in-house translation service because, citing privacy concerns, they didn’t want to rely on Google Translate. So I built a Streamlit web app around the Helsinki-NLP models and deployed it on their servers. This was also a great learning experience, as I got to learn about deploying models in production.

- Text Classification: This has been the most popular and common task in NLP. Since 2017, I have used a range of models for text classification, including CNNs, RNNs, LSTMs, GRUs, and Transformers, along with feature representations such as TF-IDF, Word2Vec, GloVe, and FastText.

There have been different variants of it. For example, in a course project on stance detection for Covid vaccines, we combined Twitter network features with text encoder features. Trust me, this is an interesting read (mainly the computation of the user network features). Here’s the link. The professor, Prof. Rodrigo Agerri, wanted me to publish this work with some tweaks, but I was busy moving to the Netherlands for the 2nd year of my Master’s and couldn’t work on it further.

There was also the sentiment neuron from OpenAI, where a single node’s activation value is used to decide the sentiment of a text. I built a wrapper around it and handed it to the labeling team at my former company, Gramener.

- Named Entity Recognition: The 2nd most used task in NLP. Starting with a BiLSTM-CRF model as my baseline in 2018, I have since experimented (successfully) with recasting NER as a seq2seq task using generative models like BART and T5. In industry, while building a patient de-identification app for Novartis, I used spaCy, scispaCy, ClinicalBERT, BioBERT, AllenNLP, and Flair.
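One way to picture NER as a seq2seq task is to turn the tag sequence into a flat target string that a generative model learns to produce, then parse the generated string back into entities. The sketch below is only an illustration under that assumption: the `LABEL: span; LABEL: span` target format and both helper names are hypothetical, and the example sentence is made up.

```python
def bio_to_target(tokens, tags):
    """Turn BIO-tagged tokens into a flat 'LABEL: span; LABEL: span' string."""
    spans = []
    current_label, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A new entity starts: close any span that is still open.
            if current_tokens:
                spans.append((current_label, " ".join(current_tokens)))
            current_label, current_tokens = tag[2:], [token]
        elif tag.startswith("I-") and current_tokens and tag[2:] == current_label:
            current_tokens.append(token)
        else:
            # "O" tag (or a stray I- tag): close any open span.
            if current_tokens:
                spans.append((current_label, " ".join(current_tokens)))
            current_label, current_tokens = None, []
    if current_tokens:
        spans.append((current_label, " ".join(current_tokens)))
    return "; ".join(f"{label}: {text}" for label, text in spans)

def target_to_spans(target):
    """Parse a generated target string back into (label, span) pairs."""
    pairs = []
    for chunk in target.split(";"):
        if ":" in chunk:
            label, text = chunk.split(":", 1)
            pairs.append((label.strip(), text.strip()))
    return pairs

# Made-up example sentence with BIO tags.
tokens = ["John", "Smith", "was", "admitted", "to", "Boston", "General", "."]
tags = ["B-PER", "I-PER", "O", "O", "O", "B-ORG", "I-ORG", "O"]

target = bio_to_target(tokens, tags)
print(target)
print(target_to_spans(target))
```

The input sentence paired with `target` would then form one training example for a model such as BART or T5; the exact target format is a design choice, and other linearizations are possible.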

- Coreference Resolution: For the same Novartis project, we used AllenNLP’s models for coreference resolution of patient/subject instances.

- Question Answering: In my recent efforts to recast NER as other tasks, I have used a BERT-based model for question answering.

- Math Word Problem Solving: This is my current thesis. Here, we deal with seq2seq, sequence2tree, and graph2tree models, which leverage different attention mechanisms and architectures such as Graph Attention Networks and Graph Convolutional Networks.

-

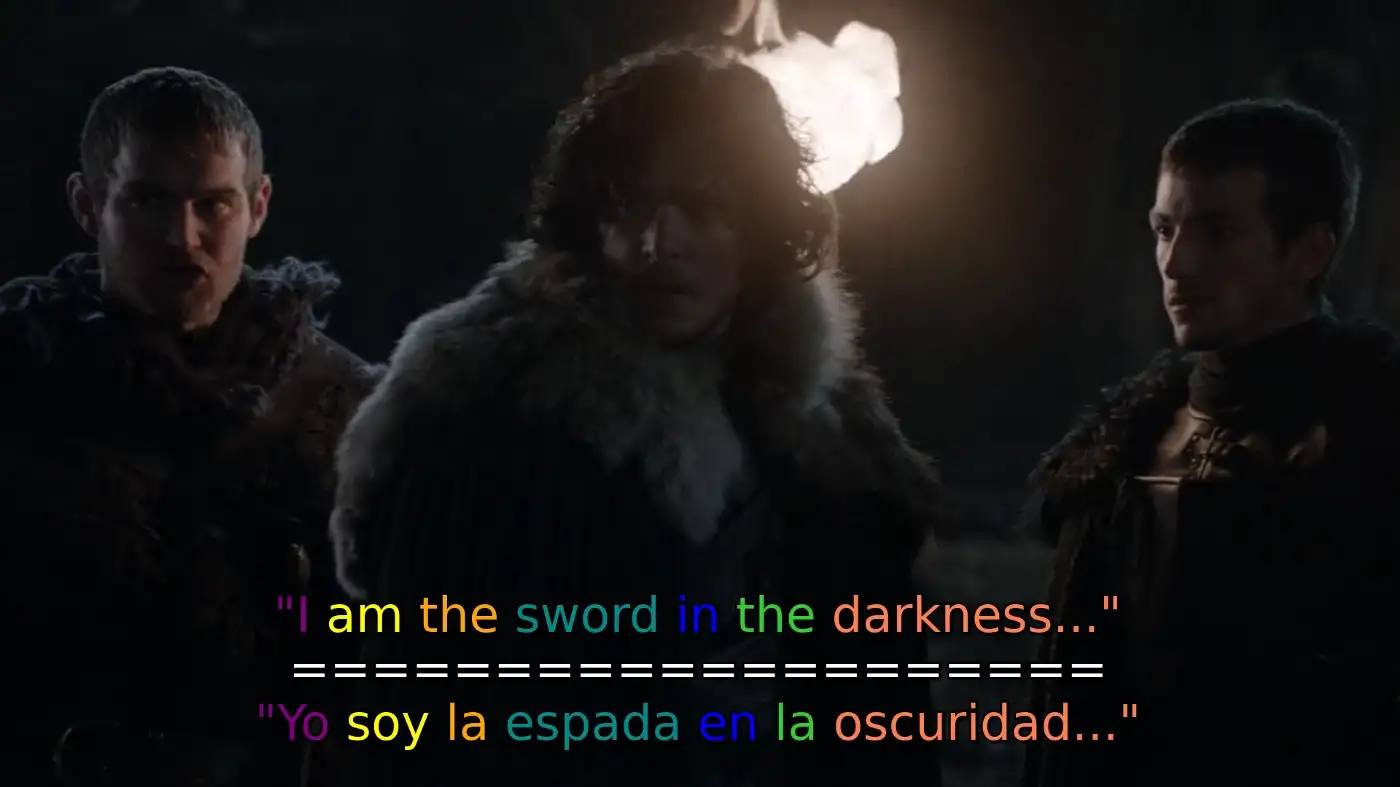

Unsupervised Word Alignment: I wrote a few project proposals to Netflix and Duolingo based on this idea. Here’s a sample image of the application I built for them. Note that there is no training involved. This is completely zero shot.
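The post doesn’t say which method the application uses. One common training-free approach, sketched here purely as an assumption, is to embed the tokens of both sentences with a multilingual encoder, compute pairwise cosine similarities, and keep the pairs where source and target tokens pick each other as best match. The vectors below are toy, hand-made stand-ins for real embeddings, and the `align` helper is hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def align(src_vecs, tgt_vecs):
    """Return (i, j) pairs where source token i and target token j pick each other."""
    sim = [[cosine(s, t) for t in tgt_vecs] for s in src_vecs]
    # Best target for each source token, and best source for each target token.
    fwd = [max(range(len(tgt_vecs)), key=lambda j: sim[i][j]) for i in range(len(src_vecs))]
    bwd = [max(range(len(src_vecs)), key=lambda i: sim[i][j]) for j in range(len(tgt_vecs))]
    # Keep only mutual best matches (intersection of both directions).
    return [(i, j) for i, j in enumerate(fwd) if bwd[j] == i]

src_tokens = ["the", "red", "car"]
tgt_tokens = ["el", "coche", "rojo"]

# Toy vectors standing in for contextual token embeddings (illustration only).
src_vecs = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.1], [0.1, 0.0, 1.0]]
tgt_vecs = [[0.9, 0.0, 0.1], [0.0, 0.2, 0.9], [0.1, 0.9, 0.0]]

alignment = align(src_vecs, tgt_vecs)
for i, j in alignment:
    print(src_tokens[i], "->", tgt_tokens[j])
```

Requiring agreement in both directions trades recall for precision: tokens without a mutual best match are simply left unaligned.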

- Multi-modal work: We built a meme retrieval system using CLIP and sentence embeddings and presented a poster on it. This is again very interesting work. Here’s the link. We also built a demo, but it’s down because of the costs.

Reading skills:

I believe I should point out my ability to read research papers. As proof, here’s the folder with all the annotated research papers I read in the last month. Reading 23 research papers (with 4 survey papers) in a month requires some understanding of NLP techniques. I also summarize papers visually for my advisors. An example file is here.

Writing skills:

Before starting my Master’s, I maintained a technical blog where I wrote about my projects. I didn’t highlight it in the interview. Here’s the link. There are Medium posts on Unsupervised Translation and Generative Adversarial Networks (GANs).

Here’s my latest coursework paper. You will find it interesting. Explainable NLP could have been my thesis topic, but I pivoted to Math Word Problem Solving.

Coding skills:

My current part-time work at AskUI has made my code much more efficient. Here’s a recent repository I am maintaining. You can see the code modularity, unit tests, and readability. But since the emphasis is on NLP, I will not go into much detail.
